July 14, 2011

by James Liao

So far, we have released Pronto’s Xorplus L2/L3 stack and the OVS stack. With the current implementation, users have to choose which stack to run when the switch boots. While this is good enough for some users, we keep getting requests for a fusion version in which users can configure the network with legacy L2/L3 protocols and dynamically use OpenFlow to direct traffic.

Since Xorplus already runs on Pronto and OVS has already been ported and released, implementing the combined stack is not a big challenge. The major challenge is the operating model between the OpenFlow and L2/L3 stacks.

Because we are proud to offer open networking, we have decided to publish our proposal here and solicit input from the community. If you have any feedback, feel free to leave a comment or send your proposed changes to support@prontosys.net.

Reference Design

HP and NEC have released software that can operate in this type of environment. From HP’s setup guide and NEC’s setup, we can tell that both use VLANs to configure OpenFlow.

The configuration procedure on both switches is roughly:

- create a VLAN

- name the VLAN (e.g. OpenFlow)

- add ports to this VLAN

- set the OpenFlow controller

- enable OpenFlow on this VLAN

- use the show command to display the status of the OpenFlow instance

- allow LLDP to run on the OpenFlow ports (this can be done through configuration or through instructions from the controller)

Users can set up multiple OpenFlow VLANs on each switch, and each VLAN can be connected to a different controller. It is possible to add a port to multiple OpenFlow VLANs, each managed by a different controller.

[Note] Does this really work? In theory it should, but in a real implementation OpenFlow populates the MAC table differently (manual insertion by the controller) from the legacy L2/L3 network (automatic learning). Running both modes on the same port might be difficult to implement.
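The VLAN-scoped procedure above can be sketched as a small data model. This is only an illustration, not HP's or NEC's CLI: the class `OpenFlowVlan`, the helper names, the port names `p1` to `p3`, and the controller addresses are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class OpenFlowVlan:
    """Hypothetical model of one OpenFlow-enabled VLAN (not a vendor API)."""
    vlan_id: int
    name: str = ""
    ports: Set[str] = field(default_factory=set)
    controller: Optional[str] = None
    enabled: bool = False
    lldp_allowed: bool = False

    def enable(self) -> None:
        # Refuse to enable OpenFlow until a controller has been set.
        if self.controller is None:
            raise ValueError("set a controller before enabling OpenFlow")
        self.enabled = True

    def show(self) -> str:
        state = "enabled" if self.enabled else "disabled"
        return (f"vlan {self.vlan_id} ({self.name}): openflow {state}, "
                f"controller {self.controller}, ports {sorted(self.ports)}")


def configure(vlan_id: int, name: str, ports: List[str],
              controller: str) -> OpenFlowVlan:
    """Walk through the steps in the same order as the list above."""
    vlan = OpenFlowVlan(vlan_id)      # create a VLAN
    vlan.name = name                  # name the VLAN
    vlan.ports.update(ports)          # add ports to this VLAN
    vlan.controller = controller      # set the OpenFlow controller
    vlan.enable()                     # enable OpenFlow on this VLAN
    vlan.lldp_allowed = True          # allow LLDP on the OpenFlow ports
    return vlan


def controllers_for_port(vlans: List[OpenFlowVlan], port: str) -> List[str]:
    """Controllers of every enabled OpenFlow VLAN that contains the port."""
    return sorted(v.controller for v in vlans if v.enabled and port in v.ports)


# Two OpenFlow VLANs with different controllers; port p2 belongs to both.
vlan_a = configure(100, "OpenFlow-A", ["p1", "p2"], "tcp:192.0.2.10:6633")
vlan_b = configure(200, "OpenFlow-B", ["p2", "p3"], "tcp:192.0.2.20:6633")
print(vlan_a.show())
print(controllers_for_port([vlan_a, vlan_b], "p2"))
```

The shared port `p2` ends up with two controllers, which is exactly the case the note questions: the model allows it, but the hardware tables behind it may not.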

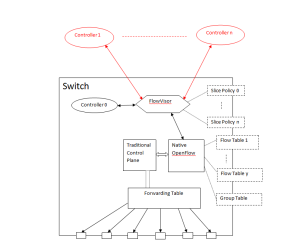

Pronto Design

While we like the idea of using VLANs to partition the legacy switching ports from the OpenFlow ports, we also see a need for OpenFlow ports to share the data plane with the legacy switch ports. This requires the legacy ports to use the same “tables” (L2 MAC, L3 FIB, or ACL) as the OpenFlow ports.

We will therefore provide two types of OpenFlow configuration: OpenFlow port configuration and OpenFlow VLAN configuration.

1. Port (including port channel) configuration.

We will add an attribute, OpenFlow, to the port configuration. When it is enabled, the port operates in OpenFlow mode and uses the port’s VLAN configuration.

When configured in OpenFlow mode, the port should by default drop all packets until the controller inserts flow entries. The spanning tree protocol should be disabled automatically.

By default, the OpenFlow port should belong to the default VLAN. This means frames from this port can be forwarded to other ports on the same switch, even if those ports are “non-OpenFlow ports”.
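A minimal sketch of the per-port behavior described above, under stated assumptions: the `Port` class, the flow format (a destination MAC mapped to an output port), and the default VLAN ID of 1 are hypothetical and do not reflect the actual Xorplus or OVS data structures.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

DEFAULT_VLAN = 1  # assumption: the default VLAN ID is 1


@dataclass
class Port:
    """Hypothetical port model with the proposed OpenFlow attribute."""
    name: str
    vlan: int = DEFAULT_VLAN
    openflow: bool = False
    stp_enabled: bool = True
    flows: List[Dict[str, str]] = field(default_factory=list)

    def set_openflow(self, enabled: bool) -> None:
        self.openflow = enabled
        if enabled:
            self.stp_enabled = False  # spanning tree is disabled automatically
            self.flows.clear()        # empty flow table, so everything drops


def egress_for(ingress: Port, dst_mac: str,
               ports: Dict[str, Port]) -> Optional[str]:
    """Return the egress port name for a frame, or None if it is dropped."""
    if not ingress.openflow:
        raise ValueError("legacy ports are handled by the L2/L3 stack")
    for flow in ingress.flows:
        if flow["dst_mac"] == dst_mac:
            out = ports.get(flow["out_port"])
            # The egress port may be a non-OpenFlow port in the same VLAN.
            if out is not None and out.vlan == ingress.vlan:
                return out.name
            return None
    return None  # default behavior: drop until the controller adds a flow


ports = {"p1": Port("p1"), "p2": Port("p2")}
ports["p1"].set_openflow(True)
before = egress_for(ports["p1"], "00:00:00:00:00:02", ports)
ports["p1"].flows.append({"dst_mac": "00:00:00:00:00:02", "out_port": "p2"})
after = egress_for(ports["p1"], "00:00:00:00:00:02", ports)
print(before, after)
```

Here `p2` stays a legacy port, yet it can receive frames from the OpenFlow port `p1` because both sit in the default VLAN.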

2. Per-VLAN configuration.

As on the HP and NEC switches, it helps to have two or more separate virtual switches, one running legacy protocols and the other(s) running OpenFlow. In the per-VLAN OpenFlow configuration, each OpenFlow VLAN can be configured with its own controller. As noted earlier, the chips may behave differently in the two modes, so the implementation might be tricky.
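With both configuration types in place, the switch has to decide which control plane owns a given frame. The sketch below shows one possible rule. The function name, its arguments, and in particular the precedence (port attribute first, then VLAN) are assumptions for illustration; the proposal does not fix an order.

```python
from typing import Dict, Set

LEGACY = "legacy"  # marker for the Xorplus L2/L3 stack


def owner_of(port: str, vlan: int, openflow_ports: Set[str],
             port_controller: str, openflow_vlans: Dict[int, str]) -> str:
    """Return the controller that owns the frame, or LEGACY.

    Assumed precedence: the per-port OpenFlow attribute wins over the
    per-VLAN OpenFlow configuration.
    """
    if port in openflow_ports:
        return port_controller
    if vlan in openflow_vlans:
        return openflow_vlans[vlan]
    return LEGACY


openflow_ports = {"p1"}
openflow_vlans = {100: "tcp:192.0.2.10:6633", 200: "tcp:192.0.2.20:6633"}
port_controller = "tcp:192.0.2.30:6633"

print(owner_of("p1", 1, openflow_ports, port_controller, openflow_vlans))
print(owner_of("p5", 200, openflow_ports, port_controller, openflow_vlans))
print(owner_of("p5", 1, openflow_ports, port_controller, openflow_vlans))
```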

OpenFlow Configuration

OVS has a well-integrated configuration server to handle its configuration. In Pronto’s integration, we want to keep that configuration as part of OVS instead of integrating it into the Xorplus configuration database.
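Keeping the configuration in OVS means the usual `ovs-vsctl` workflow would still apply. The sketch below only builds the command lines and does not run them. The `add-br`, `add-port`, `set-controller`, and `show` subcommands are standard `ovs-vsctl` commands, while the bridge name, port names, and controller address are hypothetical, and whether a fusion build would expose ports under these names is an assumption.

```python
from typing import List


def ovs_vsctl_commands(bridge: str, ports: List[str],
                       controller: str) -> List[List[str]]:
    """Build (but do not execute) the ovs-vsctl calls for one bridge."""
    commands = [["ovs-vsctl", "add-br", bridge]]
    for port in ports:
        commands.append(["ovs-vsctl", "add-port", bridge, port])
    commands.append(["ovs-vsctl", "set-controller", bridge, controller])
    commands.append(["ovs-vsctl", "show"])
    return commands


# Hypothetical bridge, port names, and controller address.
commands = ovs_vsctl_commands("br0", ["ge-1/1/1", "ge-1/1/2"],
                              "tcp:192.0.2.10:6633")
for command in commands:
    print(" ".join(command))
```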

Troubleshooting OpenFlow

OVS is built on top of Linux and leverages Linux network tools, such as tcpdump, for troubleshooting. Since Xorplus is also built on top of Linux, all of these tools should still be available to OVS.

Sustaining Protocols

While OpenFlow does not require most of the legacy L2/L3 switch protocols, some of them are still useful on OpenFlow ports. For example, LLDP can still provide link status to port management. In such cases, we want to keep these protocols available on the OpenFlow ports.
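To keep a protocol running on an OpenFlow port, the switch first has to recognize its frames. A minimal sketch of such a classifier follows. The EtherType values are standard (0x88CC for LLDP, 0x8809 with subtype 1 for LACP, 0x8100 for an 802.1Q tag); the function names and the `allow_lacp` switch are hypothetical, and LACP is off by default because its pass-through is still undecided.

```python
import struct
from typing import Optional, Tuple

ETH_P_8021Q = 0x8100   # 802.1Q VLAN tag
ETH_P_LLDP = 0x88CC    # LLDP
ETH_P_SLOW = 0x8809    # IEEE slow protocols (LACP is subtype 1)
LACP_SUBTYPE = 0x01


def ethertype_and_payload(frame: bytes) -> Tuple[int, bytes]:
    """Return the EtherType and payload, skipping one 802.1Q tag if present."""
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    offset = 14
    if ethertype == ETH_P_8021Q:
        if len(frame) < 18:
            raise ValueError("truncated 802.1Q header")
        (ethertype,) = struct.unpack_from("!H", frame, 16)
        offset = 18
    return ethertype, frame[offset:]


def sustained_protocol(frame: bytes, allow_lacp: bool = False) -> Optional[str]:
    """Name the legacy protocol to keep on an OpenFlow port, or None."""
    ethertype, payload = ethertype_and_payload(frame)
    if ethertype == ETH_P_LLDP:
        return "lldp"
    if (allow_lacp and ethertype == ETH_P_SLOW
            and payload[:1] == bytes([LACP_SUBTYPE])):
        return "lacp"
    return None


src = bytes.fromhex("020000000001")
lldp = bytes.fromhex("0180c200000e") + src + bytes.fromhex("88cc") + b"\x00\x00"
tagged = bytes.fromhex("0180c200000e") + src + bytes.fromhex("8100000188cc")
lacp = bytes.fromhex("0180c2000002") + src + bytes.fromhex("880901")

print(sustained_protocol(lldp), sustained_protocol(tagged),
      sustained_protocol(lacp), sustained_protocol(lacp, allow_lacp=True))
```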

Feedback Appreciated

We would love to hear about your use cases and your feedback on this design. In particular, answers to the following questions would really help.

1. Do we need per-VLAN configuration? Per-port configuration seems to fulfill the requirements.

[Note] Based on the feedback from Jim Chen of Northwestern University, Matt Davy of Indiana University, and Srini Seetharaman of Stanford, per-VLAN OpenFlow configuration (with multiple OpenFlow instances) is particularly useful in an environment where network control is distributed between several parties.

2. Do we need to pass any protocol other than LLDP through the OpenFlow ports?

[Note] LLDP seems to be the only required protocol for now. We are not sure about LACP yet.